Picture archiving and communication systems (PACS) remain a cornerstone of medical imaging workflows, driving technological breakthroughs that enhance clinical decision-making and make medical imaging data easier and faster to access and process.

Scale matters here. A traditional PACS archive may serve a few users in a small health center, but large-scale architectures also exist that serve hundreds of hospitals across a wide region. Storage requirements often grow quickly. This leads to regular maintenance of storage devices, and to mitigation strategies that can make long-term history harder to fetch. Given the risk of resource saturation in medical imaging archives, these systems must evolve on multiple fronts. That includes how imaging data are retrieved and transferred, for maximum throughput, efficiency, retention, and temporal availability.

Data compression has long been a cornerstone of our information systems, and it has still seen notable advances in the past few years. Despite that, many computer systems today have not kept pace with this evolution. In medical imaging, where these technologies could make a remarkable difference, adoption is frustratingly still under way. This article briefly explains why data compression continues to be important in medical imaging, and offers a glimpse of what future medical imaging systems may achieve based on today’s state of the art.

The importance of data compression

Data compression is far from a new concept. In its simplest form, it trades computational resources for a smaller payload. This trade-off was very appealing a few decades ago, when CPU speeds were increasing rapidly while network bandwidth lagged several orders of magnitude behind. A lot has changed since then. Even as we enter the 2020s, however, one does not need to visit a developing country to find suboptimal Internet connections. Even suburban health departments in central Europe may be coping with less than 5 Mbps of download and/or upload bandwidth, which can severely hamper screening work if not properly mitigated.

To illustrate the problem, consider a standard mammography study with cranio-caudal and mediolateral oblique projections of both breasts (2 ⨯ 2 = 4 images). Assume a high resolution of 3300×4090 pixels per image and a bit depth of 16 bits per pixel. Sending the full study to a remote viewer without compression would require the archive to upload approximately 104 MB to the workstation. At 5 Mbps, this takes well over 2 minutes, which is more than enough for a doctor to give up and work on something else. The same payload can be compressed to less than 20 MB without losing a single bit of information, and could then be sent in about 32 seconds over the same connection. These ratios can improve considerably if some information loss is accepted. A very high-quality encoding in JPEG Extended can retain the image’s visual fidelity, although this trade-off might not be acceptable, especially in mammography. A 5 MB study would reach the workstation in under 9 seconds over this low-bandwidth connection, which would be more bearable. Could this be achieved without sacrificing quality? Lossy image formats can reach such sizes, but very few doctors would be willing to accept them, and for good reasons.
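The transfer times above can be checked with a few lines of arithmetic. The sketch below assumes 1 MB = 10^6 bytes and a steady 5 Mbps link, and it ignores protocol and DICOM header overhead. The function names are illustrative. The pixel data alone comes to about 108 MB (103 MiB), which is in the same ballpark as the ≈104 MB figure above, depending on rounding and unit conventions.

```python
# Back-of-the-envelope transfer times for the mammography example.
# Assumptions: 1 MB = 10**6 bytes, a steady 5 Mbps link, and no protocol,
# DICOM header, or retransmission overhead.

def raw_study_bytes(width: int, height: int, bytes_per_pixel: int, n_images: int) -> int:
    """Size of the uncompressed pixel data of a study, in bytes."""
    return width * height * bytes_per_pixel * n_images


def transfer_seconds(size_bytes: float, link_mbps: float) -> float:
    """Seconds needed to send size_bytes over a link of link_mbps megabits/s."""
    return size_bytes * 8 / (link_mbps * 1_000_000)


if __name__ == "__main__":
    # 4 images of 3300 x 4090 pixels at 16 bits (2 bytes) per pixel
    raw = raw_study_bytes(3300, 4090, 2, 4)
    print(f"raw pixel data: {raw / 1e6:.1f} MB ({raw / 2**20:.1f} MiB)")

    # Study sizes quoted in the text: uncompressed, lossless, and lossy
    for label, size_mb in [("uncompressed", 104), ("lossless", 20), ("lossy", 5)]:
        secs = transfer_seconds(size_mb * 1e6, link_mbps=5)
        print(f"{label:>12}: {size_mb:3d} MB -> {secs:6.1f} s at 5 Mbps")
```

On a real network, latency and protocol overhead will make these times somewhat longer. They should be read as lower bounds.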

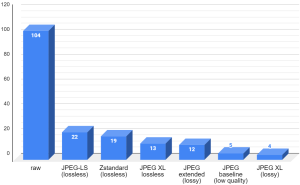

The chart above shows the compressed size, in megabytes, of a mammography study for an assortment of compression formats. Here, “raw” is the DICOM data in its native, uncompressed form.

Note: These numbers will of course vary depending on the data set and compression parameters. They are not meant to be presented as scientific output, but they nevertheless serve as a rough demonstration of what different compression formats can achieve on a single study.

The first noteworthy point from this chart is that this study can be compressed to 13% of its size without any data loss whatsoever. It may be even more surprising that, with minimal loss, the study can be compressed to as little as 4 MB. This is achieved with JPEG XL, the next-generation image coding format from the JPEG committee, which supports both lossy and lossless compression. Unfortunately, JPEG XL is not yet available in DICOM-compliant systems. If it were, a bandwidth this low would suddenly become much less of a problem.

As imaging acquisition devices improve, the quality and resolution of the captured files will increase too. In clinical pathology, workflows are shifting to a fully digital environment, and each scan will typically take up several gigabytes in total. This is a consequence of its very high resolution and its composition at multiple levels of detail. This too suggests that medical imaging data compression is more important than ever, and that it should keep up with the advances seen in the field.
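A short calculation shows how a multi-resolution scan reaches such sizes before any compression is applied. All parameters below are hypothetical and chosen only for illustration: a 100,000 × 80,000 pixel base level, 8-bit RGB, and each level halving both dimensions until it fits in a single 256 × 256 tile. The helper names are likewise illustrative.

```python
# Rough uncompressed size of a whole-slide image pyramid.
# Hypothetical parameters, for illustration only: a 100,000 x 80,000 pixel
# base level, 8-bit RGB (3 bytes/pixel), each level halving both dimensions
# until the whole level fits in one 256 x 256 tile.

def pyramid_levels(width: int, height: int, tile: int = 256):
    """Yield (width, height) for each level, from full resolution downwards."""
    while True:
        yield width, height
        if width <= tile and height <= tile:
            return
        # Halve each dimension, rounding up so no pixels are dropped
        width, height = (width + 1) // 2, (height + 1) // 2


def pyramid_bytes(width: int, height: int, bytes_per_pixel: int = 3, tile: int = 256) -> int:
    """Total uncompressed size of all pyramid levels, in bytes."""
    return sum(w * h * bytes_per_pixel for w, h in pyramid_levels(width, height, tile))


if __name__ == "__main__":
    base = 100_000 * 80_000 * 3
    total = pyramid_bytes(100_000, 80_000)
    n_levels = len(list(pyramid_levels(100_000, 80_000)))
    print(f"base level: {base / 1e9:.1f} GB")
    print(f"{n_levels} levels in total: {total / 1e9:.1f} GB uncompressed")
```

Because each level has a quarter of the pixels of the one above it, the extra levels add only about a third to the base level (1 + 1/4 + 1/16 + … → 4/3). The base resolution itself is what drives the size into tens of gigabytes, which is why such scans are stored compressed.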

Generic data compression

Not all data in medical imaging consists of values laid out in a plane or volume. Any file or data payload, such as a JSON file of search results, can be compressed losslessly, reducing network traffic and storage occupation without losing information. However, compression is not always desirable. Payloads that are very small or already compressed gain little or nothing from it, while still paying the computational cost.
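As a minimal sketch of both points, the snippet below compresses a hypothetical JSON search result with Python’s standard `gzip` module and verifies that the round trip is lossless. It then shows a tiny payload growing instead of shrinking. The record fields are invented for illustration and only loosely modelled on DICOM attributes. gzip is used here only because it is the format discussed next, not as a recommendation.

```python
import gzip
import json

# Hypothetical search-result payload: 500 study records whose field names are
# loosely modelled on DICOM attributes (illustration only).
results = [
    {
        "PatientID": f"P{i:05d}",
        "StudyDate": "20200115",
        "Modality": "MG",
        "StudyDescription": "Bilateral screening mammography",
    }
    for i in range(500)
]

payload = json.dumps(results).encode("utf-8")
compressed = gzip.compress(payload)

# Lossless: decompression restores exactly the original bytes.
assert gzip.decompress(compressed) == payload
print(f"search results: {len(payload)} bytes -> {len(compressed)} bytes gzipped")

# A tiny payload does not benefit: the gzip header and trailer alone take
# 18 bytes, so the "compressed" output is larger than the input.
tiny = b'{"ok":true}'
print(f"tiny payload:   {len(tiny)} bytes -> {len(gzip.compress(tiny))} bytes gzipped")
```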

It is often overlooked that the DEFLATE algorithm was specified as far back as 1996. DEFLATE is the compressed data format underlying ubiquitous file formats such as zip and gzip (the format behind the .gz extension). Despite its age, many tools and frameworks today still resort to this algorithm, often indirectly, whenever compression is desired. In more severe cases, compression is applied where it is not needed at all, introducing unnecessary computational overhead to the system.
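The overhead of unnecessary compression is easy to observe. This sketch gzips random bytes as a stand-in for data that is already compressed, such as encoded pixel data, which looks statistically random. It then does the same with highly repetitive text for contrast. `gzip_cost` is an illustrative helper, and the timings it prints depend entirely on the machine.

```python
import gzip
import os
import time


def gzip_cost(data: bytes, level: int = 6) -> tuple[float, float]:
    """Return (compressed size / original size, seconds spent compressing)."""
    start = time.perf_counter()
    out = gzip.compress(data, compresslevel=level)
    return len(out) / len(data), time.perf_counter() - start


if __name__ == "__main__":
    # Random bytes stand in for data that is already compressed (for example
    # JPEG-LS or JPEG 2000 pixel data), which looks statistically random.
    already_compressed = os.urandom(4 * 1024 * 1024)
    # Highly repetitive text, for contrast.
    repetitive = b"ImageType=ORIGINAL\\PRIMARY;" * 150_000

    for name, data in [("already compressed", already_compressed), ("repetitive", repetitive)]:
        ratio, secs = gzip_cost(data)
        print(f"{name:>18}: {ratio:7.2%} of original size, {secs * 1000:.0f} ms spent")
```

For the already-compressed input, the output ends up marginally larger than the input. The CPU time is spent anyway.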

Modern computers have characteristics that are significantly different from those of the 1990s. Persistent storage capacity and network speeds have both increased exponentially in their own way. Computers have also scaled horizontally much more than vertically. While growth in processor clock frequency has mostly stalled in conventional machines, processors now have multiple physical cores and much greater parallel computing capabilities. A standard gzip encoder, however, processes a single stream on a single core. This means that gzip may not only be inadequate for your use case, but may also cripple performance.
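One way to put multiple cores to work while staying gzip-compatible is to split the input into chunks, compress each chunk independently in parallel, and concatenate the resulting gzip members. Under RFC 1952, a concatenation of gzip members is still a valid gzip stream. The sketch below assumes CPython, whose `zlib` module releases the GIL while deflating, so threads can run on separate cores. The chunk size and worker count are arbitrary choices.

```python
import gzip
from concurrent.futures import ThreadPoolExecutor

CHUNK_SIZE = 1 << 20  # 1 MiB per independently compressed chunk (arbitrary)


def _compress_member(chunk: bytes) -> bytes:
    # Each chunk becomes a complete, self-contained gzip member.
    return gzip.compress(chunk, compresslevel=6)


def parallel_gzip(data: bytes, workers: int = 4) -> bytes:
    """Compress data as concatenated gzip members, one per chunk, in parallel.

    CPython's zlib releases the GIL while deflating, so the worker threads can
    run on separate cores. A concatenation of gzip members is itself a valid
    gzip stream (RFC 1952), readable by standard gzip decoders.
    """
    chunks = [data[i:i + CHUNK_SIZE] for i in range(0, len(data), CHUNK_SIZE)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves the order of the chunks
        return b"".join(pool.map(_compress_member, chunks))


if __name__ == "__main__":
    data = bytes(range(256)) * (64 * 1024)  # 16 MiB of sample data
    packed = parallel_gzip(data)
    assert gzip.decompress(packed) == data
    print(f"{len(data)} bytes -> {len(packed)} bytes")
```

This workaround has costs. Chunks do not share history, so the compression ratio drops slightly, and decompression with standard gzip tools remains sequential. Formats designed with multi-core hardware in mind avoid these compromises.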

Fortunately, there are better alternatives to gzip that do not involve giving up on compression entirely. In particular, Zstandard stands out for its much faster encoding and decoding at compression ratios similar to gzip’s. It also brings other benefits, such as a wide range of compression levels for tuning the speed/ratio trade-off, and dictionary-based compression. This makes it a very appealing format for general-purpose data compression in environments where both ends are under control. Our own benchmarks compared on-disk DICOM storage with gzip and Zstandard compression, and Zstandard showed better metrics overall, with respect to both storage and performance. Even at a low compression level, Zstandard reduced storage occupation by 4% relative to gzip… with a 5-fold storage speed-up on average! This gives another idea of what existing systems are missing out on by sticking to old data compression methods.
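Readers who want to run a comparison of their own on their data could start from a small harness like the one below. It is not the benchmark behind the figures above. It measures size ratio and throughput for the standard-library codecs (`zlib` implements DEFLATE, the algorithm behind gzip), and it adds Zstandard only if the third-party `zstandard` package happens to be installed. The codec labels and the synthetic sample are illustrative assumptions. Results depend on the data, the machine and the library versions.

```python
import bz2
import lzma
import os
import time
import zlib


def benchmark(name, compress, decompress, data: bytes, repeat: int = 3):
    """Return (name, size ratio, compress MB/s, decompress MB/s), best of `repeat` runs."""
    best_c = best_d = float("inf")
    for _ in range(repeat):
        t0 = time.perf_counter()
        packed = compress(data)
        t1 = time.perf_counter()
        restored = decompress(packed)
        t2 = time.perf_counter()
        assert restored == data, f"{name} did not round-trip losslessly"
        best_c, best_d = min(best_c, t1 - t0), min(best_d, t2 - t1)
    megabytes = len(data) / 1e6
    # Guard against a zero timer reading on very small inputs
    return (name, len(packed) / len(data),
            megabytes / max(best_c, 1e-9), megabytes / max(best_d, 1e-9))


# Standard-library codecs: zlib implements DEFLATE, the algorithm behind gzip.
CODECS = {
    "deflate-1": (lambda d: zlib.compress(d, 1), zlib.decompress),
    "deflate-6": (lambda d: zlib.compress(d, 6), zlib.decompress),
    "bzip2-9": (bz2.compress, bz2.decompress),
    "xz/lzma-6": (lzma.compress, lzma.decompress),
}

try:  # Zstandard via the third-party "zstandard" package, if installed
    import zstandard

    CODECS["zstd-3"] = (zstandard.ZstdCompressor(level=3).compress,
                        zstandard.ZstdDecompressor().decompress)
except ImportError:
    pass


if __name__ == "__main__":
    # Synthetic sample: some noise followed by a long flat region. To measure
    # your own data, replace it with e.g. the bytes of a DICOM file.
    data = os.urandom(256 * 1024) + bytes(768 * 1024)
    for name, (compress, decompress) in CODECS.items():
        _, ratio, c_speed, d_speed = benchmark(name, compress, decompress, data)
        print(f"{name:>10}: {ratio:6.1%} of original, "
              f"{c_speed:8.1f} MB/s compress, {d_speed:8.1f} MB/s decompress")
```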

Image compression

In medical imaging systems, it is typical to store and visualize data with a spatial (and sometimes temporal) relation, hence the association with the concept of “image”. These images tend to differ from the general images found outside this domain, for instance in bit depth. They can still be represented in general-purpose image formats, even without losing information, given the right choice of image format and encoding parameters.

The subject of image compression looked somewhat dormant a decade ago. Recent years, however, have brought new image formats that are already well integrated with existing software, especially web browsers. WebP is widely supported in modern browsers. AVIF files can be opened with the latest versions of Firefox and Chrome. JPEG XL is also getting closer to this level of browser integration.

On the other hand, integrating these formats into medical imaging systems is far from a drop-in replacement. For one, not all of them are suitable for medical imaging. WebP does not support more than 8 bits per channel, which naturally excludes it from CT and MR imaging, where pixel values commonly need 12 to 16 bits. Moreover, for these new image encodings to be used in standard DICOM, they must first be defined as new transfer syntaxes. Standardizing a transfer syntax requires a careful validation process. Until that happens, it is reasonable for most vendors to be reluctant to support and maintain private transfer syntaxes. The good news is that the DICOM compression working group is aware of the recent state of the art, and efforts towards proofs of concept are under way.
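The bit-depth constraint can be expressed as a simple filter. Given the number of bits actually used per sample (what DICOM records in the Bits Stored attribute), keep only the encodings whose precision can hold them. The limits in the table are simplified figures assumed for illustration. They should be checked against each specification, since limits can vary between modes and profiles.

```python
# Maximum bits per sample assumed for each encoding. These are simplified
# figures for illustration; check the exact limits (and the lossless/lossy
# modes and profiles) in each specification before relying on them.
MAX_BITS_PER_SAMPLE = {
    "JPEG Baseline": 8,
    "JPEG Extended": 12,
    "JPEG Lossless": 16,
    "JPEG-LS": 16,
    "JPEG 2000": 38,
    "PNG": 16,
    "WebP": 8,
    "AVIF": 12,
    "JPEG XL": 16,  # at least 16; some profiles allow more
}


def candidate_formats(bits_stored: int) -> list[str]:
    """Encodings whose sample precision can hold `bits_stored` bits unchanged."""
    return sorted(name for name, max_bits in MAX_BITS_PER_SAMPLE.items()
                  if bits_stored <= max_bits)


if __name__ == "__main__":
    # Typical (not universal) stored bit depths, as examples
    for modality, bits_stored in [("8-bit photo", 8), ("CT", 12), ("MR", 16)]:
        print(f"{modality:>11} ({bits_stored} bits): {', '.join(candidate_formats(bits_stored))}")
```

Fitting the bit depth is a necessary condition, not a sufficient one. A format that passes this filter still needs a standard transfer syntax before it can be used in DICOM.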

Until then, if storage and network load exceed what is desired, the available image compression options depend on how well each component of the PACS supports them.

- The acquisition devices may already support some kind of compression through standard transfer syntaxes. JPEG 2000, JPEG-LS and JPEG Lossless are the usual candidates when seeking lossless image compression. If high-resolution multi-frame captures are common, such as in fluoroscopy, the (lossy) MPEG-based transfer syntaxes will likely achieve the best compression ratios. RLE Lossless is fast to encode and decode, and older machines might support it. However, its compression ratios are unlikely to be appealing, especially for noisy data.

- Progressive decoding also deserves a mention, as it lets users start viewing a preview of the images before they fully arrive. Its usefulness depends on the quality of each progressive stage. If the intermediate stages are not good enough, they will not be useful to doctors while still incurring an overhead. This is another subject where the future looks much more promising than the present, as JPEG XL shows great potential for progressive loading.

- If network bandwidth is not a concern but long-term storage is important, the PACS archive may choose to save DICOM objects in a different encoding with higher compression ratios, and transcode them to the requested transfer syntax whenever needed. This naturally constitutes a trade-off between data size and the speed of storage and retrieval. Combined with a cold (“glacial”) storage mechanism, feature-complete archives may use fast compression for new DICOM objects and, in the background, transcode older files with slower, stronger compression. This achieves the best of both worlds.

- Without any of the above, workstations may have to resort to prefetching for a satisfying experience. Downloading studies in advance shifts the waiting time outside the user’s work hours, which mitigates the effects of low bandwidth. Even in this scenario, compression may be an important strategy for the on-premises disk cache.
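To see why RLE Lossless struggles with noisy data, consider how it works. DICOM RLE Lossless (PS3.5 Annex G) splits the pixel data into byte segments, with the most significant bytes first for 16-bit samples. It then encodes each segment with a PackBits-style scheme of literal runs and replicate runs. The sketch below implements that byte-level scheme. It ignores the 64-byte RLE header, segment padding, and the rule that runs must not cross row boundaries. Encoding every repeat of two or more bytes as a replicate run is this sketch’s own choice. The sample signals are synthetic.

```python
import random


def packbits_encode(segment: bytes) -> bytes:
    """PackBits-style byte RLE, as used for each DICOM RLE Lossless segment."""
    out = bytearray()
    i, n = 0, len(segment)
    while i < n:
        # Length of the run of identical bytes starting at i (at most 128)
        run = 1
        while i + run < n and run < 128 and segment[i + run] == segment[i]:
            run += 1
        if run >= 2:
            # Replicate run: header -(run - 1) as a signed byte, then the value
            out.append(257 - run)
            out.append(segment[i])
            i += run
        else:
            # Literal run: copy bytes until a repeat begins (at most 128 bytes)
            start = i
            i += 1
            while i < n and i - start < 128 and not (i + 1 < n and segment[i] == segment[i + 1]):
                i += 1
            out.append(i - start - 1)  # header n means "copy the next n + 1 bytes"
            out += segment[start:i]
    return bytes(out)


def packbits_decode(encoded: bytes) -> bytes:
    """Inverse of packbits_encode."""
    out = bytearray()
    i = 0
    while i < len(encoded):
        header = encoded[i]
        i += 1
        if header < 128:  # literal: copy the next header + 1 bytes
            out += encoded[i:i + header + 1]
            i += header + 1
        elif header > 128:  # replicate: repeat the next byte 257 - header times
            out += bytes([encoded[i]]) * (257 - header)
            i += 1
        # header == 128 (-128 as a signed byte) is a no-op
    return bytes(out)


def rle_size_ratio_16bit(samples: list[int]) -> float:
    """Encoded size / raw size for 16-bit samples split into two byte segments
    (most significant bytes first, then least significant bytes)."""
    high = bytes(s >> 8 for s in samples)
    low = bytes(s & 0xFF for s in samples)
    encoded = len(packbits_encode(high)) + len(packbits_encode(low))
    return encoded / (2 * len(samples))


if __name__ == "__main__":
    rng = random.Random(0)
    flat = [1000] * 50_000 + [3000] * 50_000  # uniform regions
    noisy = [2000 + rng.randint(-300, 300) for _ in range(100_000)]  # noisy signal
    print(f"flat:  {rle_size_ratio_16bit(flat):.1%} of raw size")
    print(f"noisy: {rle_size_ratio_16bit(noisy):.1%} of raw size")
```

Flat regions collapse to a couple of bytes per 128 samples per segment. Noise breaks runs, so most bytes end up in literal runs, and the output can even exceed the raw size.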

Conclusion

The takeaway is that compression can make an outstanding difference in user experience, productivity, and hardware costs. This is especially true in digital medical imaging systems, which is why it should not be overlooked when setting up a PACS.

As a PACS administrator or IT staff member, you can reach out to your PACS vendors and ask them about opportunities for data compression in your workflows. In the process, be clear about your storage requirements. These include how much inbound and outbound medical imaging traffic is produced, and how long images must remain accessible (often forever, although this is not necessarily the case for every PACS). They also include whether you have users accessing the PACS remotely (telemedicine).

For engineers, meanwhile, the time is right to experiment with and build proof-of-concept solutions that apply modern compression methods to the medical imaging domain. If you control both the senders and the receivers in your system, consider replacing gzip compression with Zstandard compression. In some scenarios, transcoding PNG or JPEG files to a format capable of better compression, such as AVIF or JPEG XL, may already be possible if you are in full control of the devices involved. By the time support for such formats has stabilized in standard browsers, the integration of these new formats into medical workflows can take advantage of it. Interoperability and legal concerns notwithstanding, understanding the potential of modern data compression and taking on the technical challenges involved will lay the foundation for future medical imaging systems.

Further reading:

- Time for Next-Gen Codecs to Dethrone JPEG by Jon Sneyers.

This piece was written by PACScenter’s Lead Developer, Eduardo Pinho (PhD).
